Introduction

Until recently, “Test Data Management” (TDM) was little more than an improvised mix of manual analysis, hand-rolled scripts, and good intentions—often leaving security and data-integrity gaps, and no reliable way to prove the job was done correctly.

Today, stricter privacy regulations (GDPR, APRA, HIPAA) and the sheer volume and complexity of enterprise data have made these ad hoc approaches untenable. Modern delivery pipelines demand test data that is automated, compliant, and fully traceable.

With a growing list of vendors claiming to solve this challenge, the conversation has shifted from “What is TDM?” to “Which platform will reduce test waste, accelerate delivery, and satisfy auditors?”

Below, TEM Dot compares the leading solutions across seven essential TDM capability areas.

Vendors Assessed

-

Broadcom (CA Test Data Manager)

-

BMC (Compuware)

-

Delphix

-

Enov8

-

GenRocket

-

IBM Optim

-

Informatica TDM

-

K2View

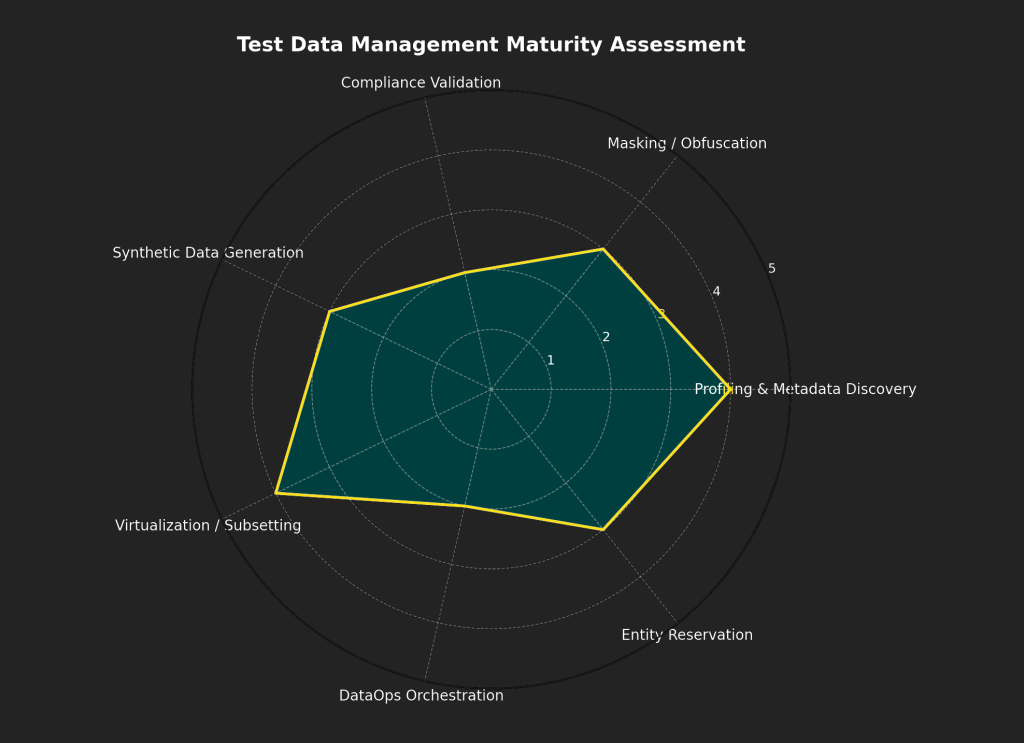

Core Test Data Management Capability Areas

1. Data Profiling & Metadata Discovery

The ability to automatically scan, analyze, and catalog the structure, relationships, and content of enterprise data sources. This includes identifying sensitive data, understanding schema dependencies, and generating metadata that supports masking, subsetting, and compliance operations.

2. Data Masking / Obfuscation

Techniques used to irreversibly transform sensitive data into anonymized or tokenized equivalents while retaining referential integrity. This protects privacy and security while allowing realistic testing and analytics on non-production environments.

3. Compliance Validation

The capability to verify that data transformations (e.g., masking, subsetting) comply with data protection regulations (e.g., GDPR, HIPAA, APRA). This may include rule-based validation, exception reporting, and traceability mechanisms to demonstrate regulatory conformity.

4. Synthetic Data Generation

The creation of entirely artificial but realistic test data that does not originate from production sources. Useful for scenarios where real data cannot be used due to privacy or security concerns. Advanced solutions support rule-driven generation, referential integrity, and test case variation.

5. Database Virtualization and/or Data Subsetting

Enables rapid provisioning of lightweight, virtual copies of databases or targeted subsets of production data. This capability reduces infrastructure usage and supports parallel test cycles, while maintaining data consistency and integrity.

6. DataOps Orchestration & Pipelines

Automates and coordinates the end-to-end flow of test data activities — including provisioning, masking, validation, and teardown — across environments. Integrates with CI/CD pipelines to ensure test data is aligned with agile and DevOps practices.

7. Test Data Entity Reservation

Allows users or teams to search & reserve specific datasets, record groups, or masked identities for exclusive use during a test cycle. Prevents data conflicts and duplication, especially in multi-stream development and testing environments.

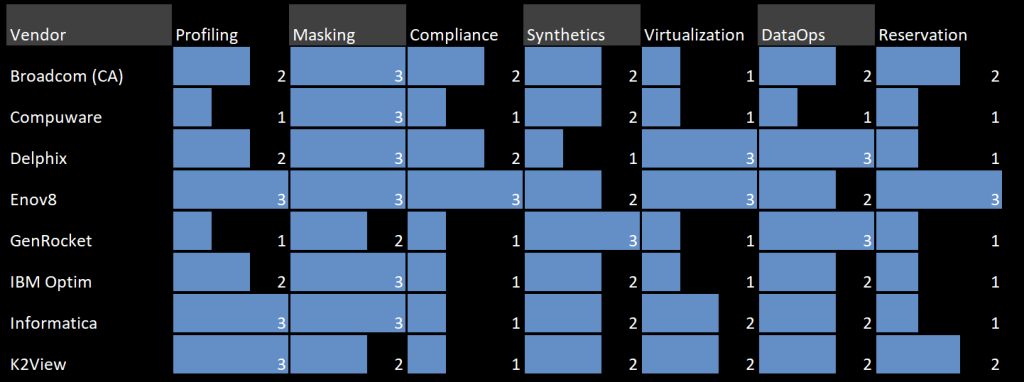

Breakdown by TDM Platfom (as of 2025)

Broadcom (CA Test Data Manager) – Scorecard

Overview:

Broadcom’s CA TDM offers mature data masking and synthetic generation capabilities. It supports automated test data delivery and compliance workflows, although it’s less competitive in DevOps orchestration and audit insights.

Website: www.broadcom.com

Score Breakdown:

- Data Profiling & Metadata Discovery: 2/3

- Data Masking / Obfuscation: 3/3

- Compliance Validation: 2/3

- Synthetic Data Generation: 2/3

- Database Virtualization or Subsetting: 1/3

- DataOps Orchestration & Pipelines: 2/3

- Data Reservation: 2/3

Total Score: 14 / 21

Compuware – Scorecard

Overview:

Compuware, now part of BMC, targets mainframe test data operations with strong legacy data masking. Strengths is its native Mainframe support. However, it offers minimal support for modern DevOps, compliance validation, and test data orchestration.

Website: www.bmc.com

Score Breakdown:

- Data Profiling & Metadata Discovery: 1/3

- Data Masking / Obfuscation: 3/3

- Compliance Validation: 1/3

- Synthetic Data Generation: 1/3

- Database Virtualization or Subsetting: 2/3

- DataOps Orchestration & Pipelines: 1/3

- Data Reservation: 1/3

Total Score: 10 / 21

Delphix – Scorecard

Overview:

Delphix is known for high-speed data virtualization and industry-leading masking features. It supports full CI/CD integration and strong automation but lacks native synthetic data generation and comprehensive compliance oversight.

Website: www.delphix.com

Score Breakdown:

- Data Profiling & Metadata Discovery: 2/3

- Data Masking / Obfuscation: 3/3

- Compliance Validation: 2/3

- Synthetic Data Generation: 1/3

- Database Virtualization or Subsetting: 3/3

- DataOps Orchestration & Pipelines: 3/3

- Data Reservation: 1/3

Total Score: 15 / 21

Enov8 – Scorecard

Overview:

enov8 offers a complete enterprise test data management and environment orchestration suite. It uniquely balances compliance validation, automation, and full traceability, making it the most feature-complete solution in this comparison.

Website: www.enov8.com

Score Breakdown:

- Data Profiling & Metadata Discovery: 3/3

- Data Masking / Obfuscation: 3/3

- Compliance Validation: 3/3

- Synthetic Data Generation: 2/3

- Database Virtualization or Subsetting: 3/3

- DataOps Orchestration & Pipelines: 2/3

- Data Reservation: 3/3

Total Score: 19 / 21

GenRocket – Scorecard

Overview:

GenRocket delivers high-performance synthetic data generation with configurable rule engines. It recently introduced basic masking and orchestration support, but still lacks strong compliance controls and reservation features.

Website: www.genrocket.com

Score Breakdown:

- Data Profiling & Metadata Discovery: 1/3

- Data Masking / Obfuscation: 2/3

- Compliance Validation: 1/3

- Synthetic Data Generation: 3/3

- Database Virtualization or Subsetting: 1/3

- DataOps Orchestration & Pipelines: 3/3

- Data Reservation: 1/3

Total Score: 12 / 21

IBM Optim – Scorecard

Overview:

IBM Optim remains a trusted solution for enterprises managing sensitive structured data. Its strength lies in masking and subsetting across legacy systems, though its synthetic capabilities and DevOps alignment remain underdeveloped.

Website: www.ibm.com/products/optim

Score Breakdown:

- Data Profiling & Metadata Discovery: 2/3

- Data Masking / Obfuscation: 3/3

- Compliance Validation: 1/3

- Synthetic Data Generation: 2/3

- Database Virtualization or Subsetting: 1/3

- DataOps Orchestration & Pipelines: 2/3

- Data Reservation: 1/3

Total Score: 12 / 21

Informatica TDM – Scorecard

Overview:

Informatica provides a broad enterprise data management platform, with robust discovery and masking features. Its test data automation and synthetic generation capabilities are solid, but audit support and reservation remain light.

Website: www.informatica.com

Score Breakdown:

- Data Profiling & Metadata Discovery: 3/3

- Data Masking / Obfuscation: 3/3

- Compliance Validation: 1/3

- Synthetic Data Generation: 2/3

- Database Virtualization or Subsetting: 2/3

- DataOps Orchestration & Pipelines: 2/3

- Data Reservation: 1/3

Total Score: 14 / 21

K2View – Scorecard

Overview:

K2View combines micro-database architecture with data masking, real-time synthetic generation, and DevOps-friendly orchestration. It stands out in agility and automation but offers moderate compliance and profiling capabilities.

Website: www.k2view.com

Score Breakdown:

- Data Profiling & Metadata Discovery: 3/3

- Data Masking / Obfuscation: 2/3

- Compliance Validation: 1/3

- Synthetic Data Generation: 2/3

- Database Virtualization or Subsetting: 2/3

- DataOps Orchestration & Pipelines: 2/3

- Data Reservation: 2/3

Total Score: 14 / 21

Overall Vendor Scorecard – Test Data Management

🏆 Top Performers in Test Data Management (2025)

Our Panel’s Top 3 Picks

1. Enov8

Strengths:

-

Comprehensive capabilities across profiling, masking, synthetics, virtualization, DataOps, and test data reservation.

-

A one stop shop for DataSec, DataOps and platform also has complete Test Environment & Release Management functionality.

-

Strong governance and orchestration features & ideal for regulated or complex enterprise environments.

Ideal For: Enterprises seeking a unified TDM and Application governance platform.

2. Delphix

Strengths:

-

Historical Industry leader in database virtualization and rapid test environment provisioning.

- Effective masking and synthetic data support tailored for DevSecOps pipelines.

Ideal For: Teams focused on delivering secure, compliant test data within CI/CD workflows.

3. Broadcom (CA Test Data Manager)

Strengths:

-

A lomg term champion in the TDM space. Proven masking and synthetic data generation capabilities, particularly for compliance-centric use cases.

-

Strong support for traditional enterprise test data delivery models.

Ideal For: Large organizations with large legacy data sets.

This scorecard reflects TEM Dot’s independent assessment across seven core enterprise criteria. It does not account for other organization-specific needs / priorities such as specialised data sources, ease of onboarding, ease of use, service support models, or pricing. If you believe any tool has been misrepresented or wish to suggest another vendor for evaluation, please contact us via our feedback form.