Executive Summary

As digital transformation accelerates, enterprise IT organizations are under increasing pressure to deliver software faster, with greater reliability and lower risk. At the heart of this effort lie the Software Development Lifecycle (SDLC) and the effective orchestration of Non-Production Environments. While many organizations rely on ServiceNow for IT Service Management (ITSM), a growing number are attempting to extend its reach into SDLC and Test Environment Management (TEM).

The rationale is often rooted in convenience and familiarity. However, this paper explores why that approach introduces significant cost, complexity, and architectural misalignment, and why enterprises should instead consider purpose-built platforms such as Enov8 or Planview.

Before we go further, consider this:

Using ServiceNow to manage your SDLC and Non-Production / Test Environments is like driving a Formula 1 car over cobblestones. You can do it, but it’s going to be expensive, uncomfortable, and you won’t get very far.

This analogy reflects the mismatch between a tool designed for stability and control (ServiceNow) and the fast-moving, experimental nature of modern software delivery.

1. ServiceNow: Strength in the Wrong Place

ServiceNow is a recognized leader in the ITSM space. Its capabilities in incident management, change control, asset tracking, and governance are well suited for Production environments. Its strength lies in enforcing structure, approvals, and auditability, all of which are critical for managing live systems.

However, the SDLC is fundamentally different. It is a space defined by change, agility, and experimentation. Teams are iterating constantly, infrastructure is dynamic, and environments are frequently provisioned, decommissioned, or reconfigured to meet fast-evolving requirements. Applying a production-first tool like ServiceNow in this space imposes rigidity where flexibility is essential.

2. The Core Challenges of ServiceNow in SDLC & TEM

2.1 Rigid Workflows and Poor Agility

At its core, ServiceNow operates as a workflow-based system. Every request, change, or action is routed through predefined paths and often requires human intervention. While this is ideal for regulated Production processes, it is an impediment to the dynamic nature of Dev/Test environments. Teams often require instant environment provisioning, ad-hoc system bookings, or rapid rollback—capabilities not easily supported by ServiceNow without extensive customization.

2.2 Lack of SDLC Context

ServiceNow lacks native awareness of core SDLC concepts such as:

- System Instances and Environment Lanes

- Microservices and Service Meshes

- Release Trains and Implementation Plans

- Test Data Lifecycles and Compliance

To compensate, enterprises must engage in significant customization—developing custom apps, extending the CMDB, and integrating third-party DevOps tools. The cost of this re-architecture is high, both financially and operationally.

2.3 Limited Environment Intelligence

ServiceNow’s CMDB provides visibility of configuration items, but it is largely a static record and, without additional customization, lacks real-time awareness of Non-Production Environments. It does not natively track environment drift, usage trends, test data readiness, or booking conflicts. Nor does it support proactive alerting for environment outages, dependency breaks, or test cycle disruptions.
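To make "booking conflicts" and "environment drift" concrete, the following is a minimal Python sketch of the two checks. It is illustrative only: the `Booking` class, the function names, and the sample environment names are hypothetical, and it does not represent the API or data model of ServiceNow, Enov8, or Planview. It assumes bookings are half-open time windows and that drift means a component version differing from an agreed baseline.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Booking:
    """Hypothetical reservation of a named environment for a time window."""
    environment: str
    team: str
    start: datetime
    end: datetime  # exclusive: a booking ending at 12:00 does not clash with one starting at 12:00


def find_booking_conflicts(bookings):
    """Return pairs of bookings on the same environment whose windows overlap."""
    conflicts = []
    ordered = sorted(bookings, key=lambda b: (b.environment, b.start))
    for i, first in enumerate(ordered):
        for second in ordered[i + 1:]:
            if second.environment != first.environment:
                break  # sorted by environment, so the rest belong elsewhere
            if second.start >= first.end:
                break  # sorted by start, so no later booking overlaps `first`
            conflicts.append((first, second))
    return conflicts


def find_drift(baseline, actual):
    """Compare component versions; return {component: (expected, found)} for mismatches.

    A component missing from either side is reported with None for that side.
    """
    drift = {}
    for component in sorted(set(baseline) | set(actual)):
        expected = baseline.get(component)
        found = actual.get(component)
        if expected != found:
            drift[component] = (expected, found)
    return drift


if __name__ == "__main__":
    # Sample data is invented for illustration.
    bookings = [
        Booking("SIT1", "payments", datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 12)),
        Booking("SIT1", "lending", datetime(2024, 1, 1, 11), datetime(2024, 1, 1, 13)),
        Booking("UAT1", "payments", datetime(2024, 1, 1, 11), datetime(2024, 1, 1, 13)),
    ]
    for a, b in find_booking_conflicts(bookings):
        print(f"Conflict on {a.environment}: {a.team} overlaps {b.team}")

    print(find_drift({"api": "1.2.0", "db": "14"}, {"api": "1.3.0", "db": "14"}))
```

Checks of this kind are simple in isolation. The harder part, and the gap described above, is keeping the underlying booking and version data current enough for the results to be trusted.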

2.4 Developer Friction and Shadow IT

When environments are hard to access or manage, teams look for workarounds. Spreadsheets, ad-hoc scripts, or shadow booking systems emerge—undermining governance and observability. Ironically, the use of ServiceNow to enforce control often results in less control over SDLC operations.

2.5 High Switching Costs and Vendor Lock-in

Once customized for SDLC or TEM, ServiceNow becomes a tightly coupled part of the delivery toolchain. Switching away becomes difficult and expensive, especially as custom workflows proliferate. Organizations may find themselves trapped in a tool that was never purpose-built for software delivery.

3. The Hidden Cost of Convenience

The primary driver for using ServiceNow in SDLC is perceived convenience: “We already use it, so let’s extend it.” But this short-term mindset carries long-term consequences:

- Slower time-to-market due to manual workflows

- Increased operational overhead

- Poor developer satisfaction and tool adoption

- Gaps in compliance, reporting, and automation

- A brittle architecture that hinders innovation

In effect, the decision to extend ServiceNow beyond its intended purpose creates friction at precisely the point where agility is most needed.

4. Purpose-Built Alternatives: Enov8 and Planview

Organizations seeking to modernize their SDLC environment management should consider platforms designed specifically for that domain. Two such solutions are Enov8 and Planview:

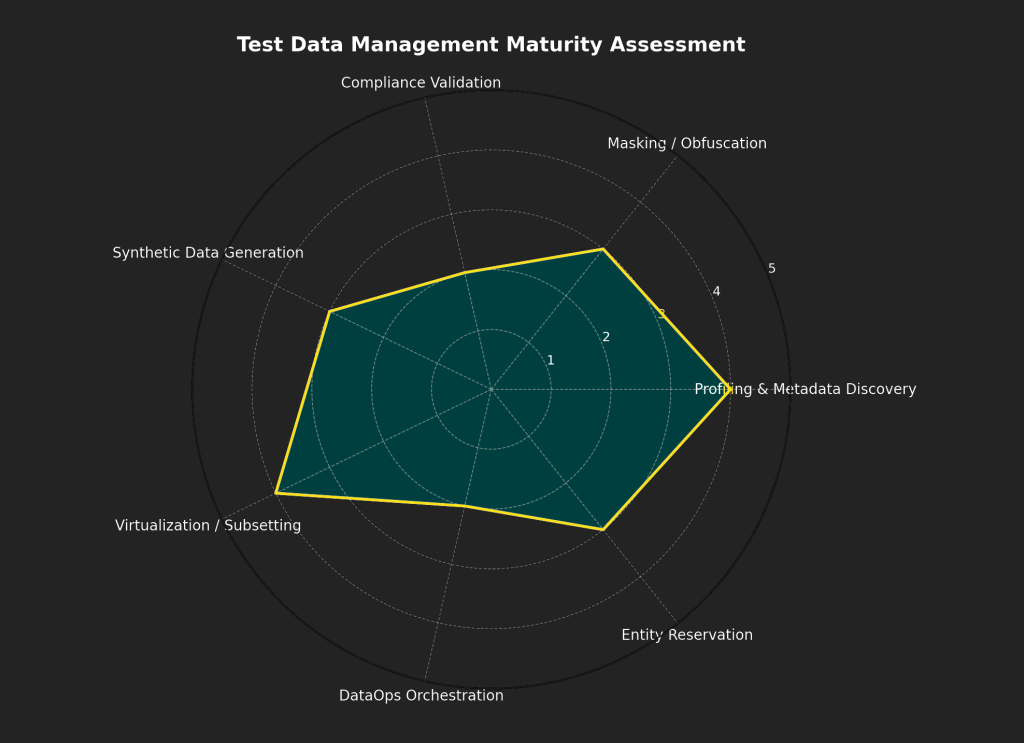

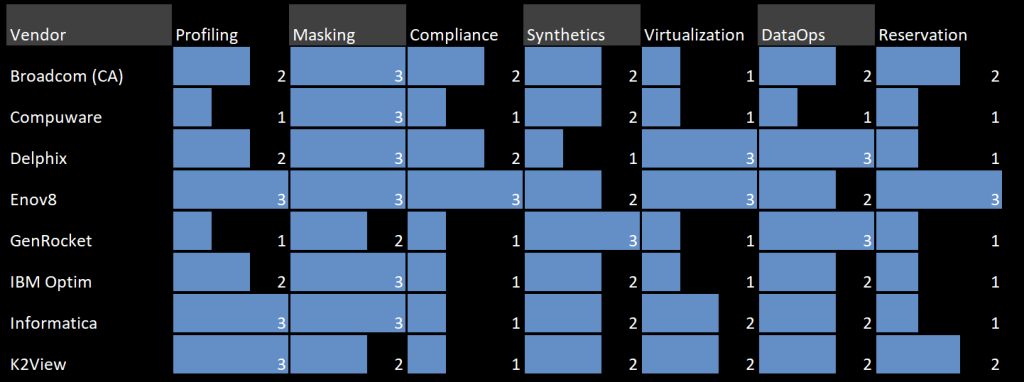

- Enov8 Environment & Release Manager brings visibility, control, and automation to the entire SDLC environment estate. It helps organizations manage system instances, microservices, test data, releases, and compliance from a single pane of glass.

- Planview (Plutora) offers robust capabilities in enterprise release orchestration and environment coordination. It supports planning, governance, and system dependency mapping across large, complex delivery portfolios.

Both solutions address the fundamental limitations of using ITSM tools for SDLC and provide the dynamic control, integration, and insight required to support continuous delivery at scale.

5. Recommendations for Technology Leaders

If you’re currently using—or considering using—ServiceNow to manage your Non-Production Environments or SDLC workflows, it may be time to pause and reassess. Ask yourself:

- Are my teams able to provision environments and data with speed?

- Do I have visibility into environment usage, conflicts, and drift?

- Am I relying on customizations that make change difficult and costly?

- Are developers working with the platform—or around it?

If the answers to these questions are concerning, the issue may not be your teams or your processes. It may be the platform itself.

Conclusion: Right Tool, Right Job

ServiceNow remains an excellent ITSM platform. But in the world of software delivery, especially in Dev/Test environments, its architecture and priorities do not align with the demands of the modern SDLC.

Success in today’s enterprise delivery landscape requires more than control. It requires insight, automation, and the flexibility to support continual change. Purpose-built solutions like Enov8 and Planview offer a better path forward, one designed not for operational stability, but for delivery excellence.

The cost of convenience is real. Make sure you’re not paying for it with agility, velocity, and innovation.