Testing is an essential component of software development. Modern software developers live by the mantra, "If it isn't tested, it isn't done." There's a lot of focus on unit and acceptance testing, but organizations often slip when it comes to practical system testing. That is, many projects fail to put all the parts together and check their interactions.

Sometimes the reason for this is simple: we don't have a good testing environment.

In this post, I will discuss why this is and detail some of the side effects of missing out on that good environment. I'm going to start by creating some context, then I'll talk about fundamental practices that can be applied to the general problem, and I'll close out with a discussion on testing environments.

Why Don't We Have Good Testing Environments?

Organizations face a number of competing factors when it comes to software development and deployment. The first and most obvious are building the right thing and delivering a stable solution that users like. Within our organizations, we are also always working to balance the cost of development against the cost of operations. Minimizing total cost is hard when these two goals pull against each other.

Further, the creation and maintenance of each environment is a complicated and time-consuming activity. Coordinating multiple environments has a multiplicative effect on cost. Employees also suffer from fatigue and distraction. Being consistent and thorough becomes more and more difficult as complexity increases. Each of these factors leads to increased cost through waste and rework.

So that's the problem. How do we fix it?

Tradition vs. the New Way

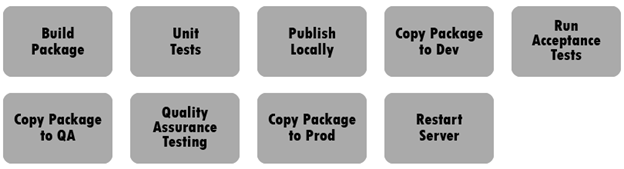

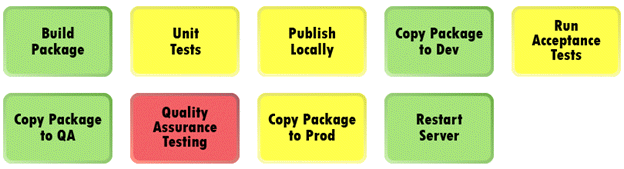

Traditionally we establish one or more test environments. Often our test environment is a smaller version of production. It may not contain the same volume of data. There may not be the same amount of network traffic. The servers might not have the same number of cores or amount of RAM.

This is not the most effective way of testing the system as a whole, and we all know that. But there are always reasons that we do it.

The new way of doing things is to create a production-equivalent environment on demand and run the tests against it. That is, we automate the infrastructure to the degree that we can create an entire environment with a simple button click and execute our test suite.
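To make the "button click" idea a little more concrete, here is a minimal sketch of the create, test, and destroy cycle. It is not tied to any particular tool: `fake_provision`, `fake_teardown`, and `run_test_suite` are hypothetical stand-ins for whatever your infrastructure automation and test runner actually provide.

```python
from contextlib import contextmanager
from typing import Callable, Iterator


@contextmanager
def ephemeral_environment(provision: Callable[[], dict],
                          teardown: Callable[[dict], None]) -> Iterator[dict]:
    """Create an environment, hand it to the caller, and always tear it down."""
    env = provision()
    try:
        yield env
    finally:
        teardown(env)  # runs even if the test suite raises


# Hypothetical stand-ins for calls into your infrastructure tooling.
events = []


def fake_provision() -> dict:
    events.append("provisioned")
    return {"name": "test-env-1", "url": "http://localhost:8080"}


def fake_teardown(env: dict) -> None:
    events.append(f"destroyed {env['name']}")


def run_test_suite(env: dict) -> bool:
    events.append(f"tested {env['url']}")
    return True


with ephemeral_environment(fake_provision, fake_teardown) as env:
    suite_passed = run_test_suite(env)

print(events)
```

The point of the `finally` clause is cost control: an environment that outlives a crashed test run keeps costing money until someone notices it.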

We should be building our test environments with the new way in mind as our ideal. That said, there are still many issues that we need to deal with in order to achieve this goal. The following are the heavy hitters on our issue management list.

What Do We Need for Better Testing Environments?

First, it's essential that you carefully lay out what tools you'll need and how they will be used. Having a solid foundation to start with will be helpful later on, so don't skimp on the thinking here. Identify the capabilities you are looking for in setting up an environment. Then ensure that you have the tooling in place to support that. It's much easier to build things into the system in the first place.

Having said that, as with all things, plan only for what you know you need. Don't be overly speculative or overly ambitious. Focus on what you know to be true about the end-state and work to make that a reality.

There is an ever-growing list of tools available to help with every aspect of managing your environment. First off, the major cloud providers expose APIs for nearly every aspect of configuration, allocation, deployment, and provisioning. On top of those APIs there are often whole SDKs and CLIs to make using them even easier. Beyond that, there are third-party tools that make the use of those SDKs almost transparent.

As we consider how to create a good test environment, there are a number of considerations that we need to keep in mind.

What Makes for a Good Testing Environment?

The problem you might encounter is that there are so many tools you can't keep track of them. Further, not all of the tools and components may be entirely in your control. The difficulty here is balancing a lean solution against the vast array of available tools for managing that solution. Finding a tool that is light and easy to apply is a first-order knowledge problem: how do you make a decision about an ever-changing environment over which you have little control without arriving at a possibly irreconcilable conflict?

This conundrum can be resolved with a light touch. If you can create a lean solution that satisfies your platform requirements, you have a basis for discovering cost savings without sacrificing capabilities.

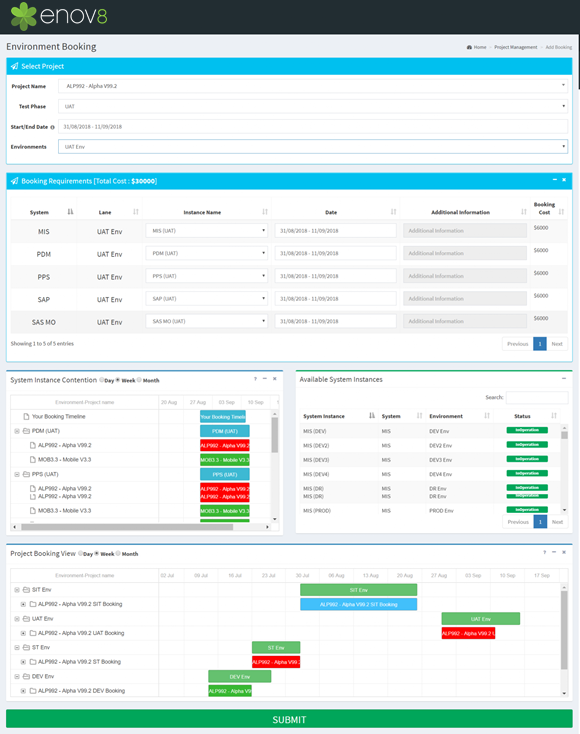

This is where Test Environment Management (TEM) comes into your plan. At least in part, TEM can help you wrangle all these components and manage their use and deployment.

For good TEM, you'll need the following components.

- Testability

Modern software is tested software. Over the last 20 years, we have changed the way we make software by adding a tremendous focus on testability. While the debate rages on about what the most effective means of testing is, one thing we can count on is: there will be tests.

Building a systems infrastructure that supports testability is absolutely necessary for a modern delivery pipeline. So when we think about the capabilities we will need, it should be a given that we can test the infrastructure before deployment.

So our test environment itself must be testable. Validation of the environment itself is a critical feature of our solution.
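As a rough illustration of what validating the environment can look like, the sketch below runs a set of named checks and reports whether the environment is ready for a test run. The check names and the lambdas behind them are hypothetical; real checks would probe your actual database, queue, certificates, and so on.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str = ""


def validate_environment(checks: Dict[str, Callable[[], bool]]) -> List[CheckResult]:
    """Run every check and record the outcome; a crashing check counts as a failure."""
    results = []
    for name, check in checks.items():
        try:
            results.append(CheckResult(name, bool(check())))
        except Exception as exc:
            # Keep going so one broken check does not hide the others.
            results.append(CheckResult(name, False, repr(exc)))
    return results


def environment_is_ready(results: List[CheckResult]) -> bool:
    return all(r.passed for r in results)


# Hypothetical checks for illustration only.
example_checks = {
    "database_reachable": lambda: True,
    "queue_reachable": lambda: False,
    "certificate_valid": lambda: 1 / 0 > 0,  # raises ZeroDivisionError on purpose
}

results = validate_environment(example_checks)
for r in results:
    print(f"{r.name}: {'ok' if r.passed else 'FAILED'} {r.detail}")
print("ready:", environment_is_ready(results))
```

Running every check instead of stopping at the first failure gives you the full picture of a broken environment in one pass.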

- Configuration Management

If we're going to use automated releases, we have to have good configuration management. Because we will make all our environments essentially the same way, this should be a straightforward process of identifying the configuration and codifying that into our build process. When we have done this, all environments going forward will be consistent.
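One way to picture "codifying the configuration" is a single shared definition plus a small, explicit set of per-environment overrides. This is only a sketch under that assumption; the keys and values in `BASE_CONFIG` and `OVERRIDES` are made up for illustration.

```python
from copy import deepcopy
from typing import Any, Dict

# Hypothetical shared definition; every environment starts from this.
BASE_CONFIG: Dict[str, Any] = {
    "app": {"replicas": 3, "log_level": "INFO"},
    "database": {"engine": "postgres", "pool_size": 20},
}

# Only the values that genuinely differ per environment live here.
OVERRIDES: Dict[str, Dict[str, Any]] = {
    "production": {},
    "test": {"app": {"log_level": "DEBUG"}},
}


def merge(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict with override applied recursively on top of base."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


def config_for(environment: str) -> Dict[str, Any]:
    """Build the full configuration for one named environment."""
    return merge(BASE_CONFIG, OVERRIDES[environment])


print(config_for("test"))
```

Keeping the override table short is the design goal here: every entry in it is a place where test and production are allowed to disagree.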

- Release Management

Just as we would with a production release, our test environment is going to need release management. We need to know what features and fixes are contained in a release so we understand what we should be testing. This requires us to integrate our change management system, source control tools, and release process.

- Networks

Network configuration is a concern we must also address. Each deployed environment needs to function with a minimum amount of customization. That is, just because the deployment is to a test environment doesn't mean we should have to reconfigure every service. Virtual networks, Kubernetes, Docker-Compose, and other tooling can minimize these customizations.

- Load and Volume Testing

One thing that can be difficult to emulate in our test environment is message volume. In order to test load and performance, we will need some means of creating a transaction volume similar to production. For many web applications, this isn't overly complicated, but for an IoT solution with hundreds of thousands of devices, this can be a daunting task. Careful consideration of these needs is required in planning a test environment. There will be a lot of heavy lifting.
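To give a feel for the kind of tooling this implies, here is a small sketch that builds a time-ordered send schedule for a fleet of simulated devices. It assumes each device sends messages independently with exponentially distributed gaps, which is a modeling choice on my part and not a claim about your traffic. The function name and parameters are hypothetical.

```python
import heapq
import random
from typing import Iterator, Tuple


def message_schedule(devices: int, per_device_rate_hz: float,
                     duration_s: float, seed: int = 0) -> Iterator[Tuple[float, int]]:
    """Yield (send_time, device_id) pairs in time order for the whole fleet."""
    rng = random.Random(seed)  # seeded so a load run can be repeated
    # One pending "next send" per device, kept in a min-heap ordered by time.
    heap = [(rng.expovariate(per_device_rate_hz), d) for d in range(devices)]
    heapq.heapify(heap)
    while heap:
        send_time, device = heapq.heappop(heap)
        if send_time > duration_s:
            break  # the earliest pending send is past the end, so all others are too
        yield send_time, device
        next_time = send_time + rng.expovariate(per_device_rate_hz)
        heapq.heappush(heap, (next_time, device))


schedule = list(message_schedule(devices=100, per_device_rate_hz=2.0, duration_s=10.0))
print(len(schedule), "messages scheduled")
```

A real driver would wait until each `send_time` and then post a message to the system under test; separating the schedule from the sending makes it easier to replay the same load against different builds.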

- Incorporation of Databases

Similar to message volume, test data is often a challenge. When planning a testing environment, we need to accommodate not only the database configuration, but also the volume of data in order to ensure that we have a proper simulation of the real world.

One approach is to develop a data loader that simulates real data. This loader is executed between the environment creation and test execution steps. Of course, this can be a challenging task for large systems. An alternative is to make copies of production systems. When we copy production data, we need to be sure we observe the financial and privacy laws and regulations that apply to it; data masking can be as challenging as simulated data loading.
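As one illustration of data masking, the sketch below replaces sensitive fields with keyed hashes so that the same input always maps to the same masked value, which keeps joins between tables intact. The field names and the key are hypothetical, and this kind of pseudonymization may not be sufficient on its own for your regulatory obligations; treat it as a starting point for that conversation.

```python
import hashlib
import hmac

# Hypothetical key; in practice this would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"
# Hypothetical list of fields that must not reach the test environment.
SENSITIVE_FIELDS = {"name", "email", "account_number"}


def mask_value(field: str, value: str) -> str:
    """Deterministically replace a value with a keyed hash of field and value."""
    message = f"{field}:{value}".encode("utf-8")
    digest = hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()
    return f"{field}_{digest[:12]}"


def mask_record(record: dict) -> dict:
    """Mask the sensitive fields of one record and leave the rest untouched."""
    return {
        key: mask_value(key, str(value)) if key in SENSITIVE_FIELDS else value
        for key, value in record.items()
    }


original = {"id": 7, "email": "pat@example.com", "balance": 12.5}
masked = mask_record(original)
print(masked)
```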

For greenfield development, getting ahead of these issues will save you a lot of pain and suffering. In the brownfield, developing a careful plan will help you immensely; organic growth in this area yields results, but often with the consequence of interrupted or delayed deployments as issues arise and data is backfilled into the process.

- Production's Security Settings

A final issue to be considered is security. In order to get a realistic test of our system, we need to include all of the security settings our production environment has. This includes establishing users with different roles, server certificates, network restrictions, and all of the other settings and configurations we have in production. Because we have automated the deployment process, this shouldn't be difficult to do, but it does increase the number of things we need to keep track of.
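Keeping track of those settings is easier if the comparison itself is automated. The sketch below compares two flat dictionaries of security-relevant settings and reports where the test environment departs from production. The setting names are invented for the example, and how you extract the real settings depends entirely on your platform.

```python
def parity_report(production: dict, test: dict) -> dict:
    """Summarize how the test environment's settings differ from production's."""
    shared = set(production) & set(test)
    return {
        "missing": sorted(set(production) - set(test)),   # in production, absent in test
        "extra": sorted(set(test) - set(production)),     # only present in test
        "different": sorted(k for k in shared if production[k] != test[k]),
    }


# Hypothetical settings for illustration only.
production_settings = {
    "tls_required": True,
    "roles": ["admin", "auditor", "user"],
    "allowed_cidrs": ["10.0.0.0/8"],
}
test_settings = {
    "tls_required": False,
    "roles": ["admin", "auditor", "user"],
    "debug_endpoint": True,
}

report = parity_report(production_settings, test_settings)
print(report)
```

An empty report is the goal; anything listed is a place where the test environment might tolerate something production will not.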

What Are the Risks of Not Having a Good Testing Environment?

I've described a complex system of testing environments and automation in very abstract terms. I'll add to those generalizations an important takeaway: if you don't have a consistent, reliable, and fast testing environment, you are at great risk for failure.

I don't mean your project will fail. I mean you are at risk that any particular deployment won't go well. If you don't manage your test environment well, it's easy to get wrapped up in the test cycle with systems that won't deploy or tests that cannot execute. You might even release bugs because your test environment is tolerant of things that production won't allow.

It is essential that your organization puts effort into the creation, growth, and maintenance of an automated testing environment in order to maximize the effectiveness of your development efforts.

So, Why TEM?

All of the above are necessary components of a modern software delivery pipeline. As organizations move toward continuous deployment, the need for automation grows, and more tooling is necessary to enable that automation. Test environments specifically need additional management in order for things to run smoothly and cost-effectively.

If you want to get into more detail, there are a number of articles and posts elsewhere on the general topic of Test Environment Management (TEM). I can suggest this article that describes the use case for TEM and this one discussing the cost of an inefficient test environment.

Failure to create these test environments puts the organization at risk and can be very costly. In order to create test environments with any reasonable amount of consistency, you must manage them. Therefore, test environment management needs to be a required component of your environment.

Author Rich Dammkoehler

This post was written by Rich Dammkoehler. Rich has been practicing software development for over 20 years. In the past decade, he has been a Swiss Army Knife of all things agile and a master of agile fu. Always willing to try new things, he’s worked in the manufacturing, telecommunications, insurance and banking industries. In his spare time, Rich enjoys spending time with his family in central Illinois and long-distance motorcycle riding.