Today’s IT organizations are busier than ever. They process more data, employ more people, and empower more businesses than at any other time in history. This growth in IT power and responsibility highlights the need for IT organizations to build on good processes. Many organizations turn to ITIL and IT service management to give their IT organization structure. While ITIL is a terrific framework for managing IT organizations, it’s not a silver bullet. Simply knowing about ITIL and using it to structure your IT organization isn’t enough to ensure success.

If you’re concerned that your IT service management processes might be failing your team or your business, read on. I’ve laid out five red flags that will help you detect whether your IT service management is failing.

#1: You’re Not Properly Scoping Changes

A common mistake among IT organizations is failing to set realistic targets for the success of new processes. This mistake can take several forms, all of them damaging to your business.

One form is scoping that isn’t measurable. For instance, leadership doesn’t provide any specific targets but merely sets a goal that things will “get better.” When success is defined in relative terms like “getting better,” measurement becomes subjective. Subjective measurements depend on stakeholders’ perceptions, which are easily swayed by day-to-day variations in service. You don’t want the business to perceive your team as failing because the CTO’s laptop just happened to have a faulty hard drive the day before an organizational review of service management objectives.

Another form is scoping that’s too ambitious. For example, an IT service manager might set a service level agreement (SLA) promising that every incident will be resolved within one hour. That’s not a realistic timeline. Unrealistic timelines degrade employee morale and make the goals themselves seem meaningless.

The opposite problem can also cause trouble for an IT organization. There’s no point in setting goals that won’t accomplish anything. A goal that’s too loose can be met without your organization changing at all, so it produces no improvement and fails to provide value to the business.
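To make the difference between a relative goal and a measurable one concrete, here is a minimal sketch. It assumes a hypothetical list of incident resolution times for one review period and a hypothetical target of resolving 90% of incidents within 4 hours; the numbers are illustrative, not a recommendation. Expressing the target as a share of incidents, rather than a promise about every incident, gives you a figure you can calculate the same way at every review.

```python
from datetime import timedelta


def sla_attainment(resolution_times: list[timedelta], target: timedelta) -> float:
    """Return the fraction of incidents resolved within the target time."""
    if not resolution_times:
        raise ValueError("no incidents to measure")
    met = sum(1 for t in resolution_times if t <= target)
    return met / len(resolution_times)


def meets_goal(resolution_times: list[timedelta], target: timedelta,
               required_share: float) -> bool:
    """Check a measurable goal such as '90% of incidents resolved within 4 hours'."""
    return sla_attainment(resolution_times, target) >= required_share


# Hypothetical resolution times (in hours) for one review period.
times = [timedelta(hours=h) for h in (0.5, 1.5, 2, 3, 3.5, 4, 6, 1, 2.5, 0.75)]

four_hours = timedelta(hours=4)
one_hour = timedelta(hours=1)

print(f"{sla_attainment(times, four_hours):.0%} resolved within 4 hours")  # prints "90% resolved within 4 hours"
print(meets_goal(times, four_hours, 0.90))  # prints "True"

# The same team looks like it is failing against an "every incident in one hour" SLA.
print(f"{sla_attainment(times, one_hour):.0%} resolved within 1 hour")  # prints "30% resolved within 1 hour"
```

Run against the same data, the two targets tell very different stories: the realistic target is met, while the over-ambitious one makes the team look like it’s failing even though nothing about its work changed.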

#2: You’re Using the Wrong Tools

While ITIL is primarily focused on creating good processes for your IT organization, tooling is still very important. Regardless of your role in your business’s IT organization, you need the right information at the right time to do your job. High-quality IT service management software is often underrated as part of a good IT service management implementation. It’s not just about getting the right information to the right people. It’s also about making sure the software is easy for business users to use. An effective IT service management implementation puts customers in a position to succeed, even when other parts of the IT organization are failing.

One way to identify failing tools is to look for common pain points. Spend some time with key users of your IT service management software. Do they regularly have a hard time finding things? Do they spend their time trying to make sure they don’t “mess up” the software? Does the software itself suffer regular outages?

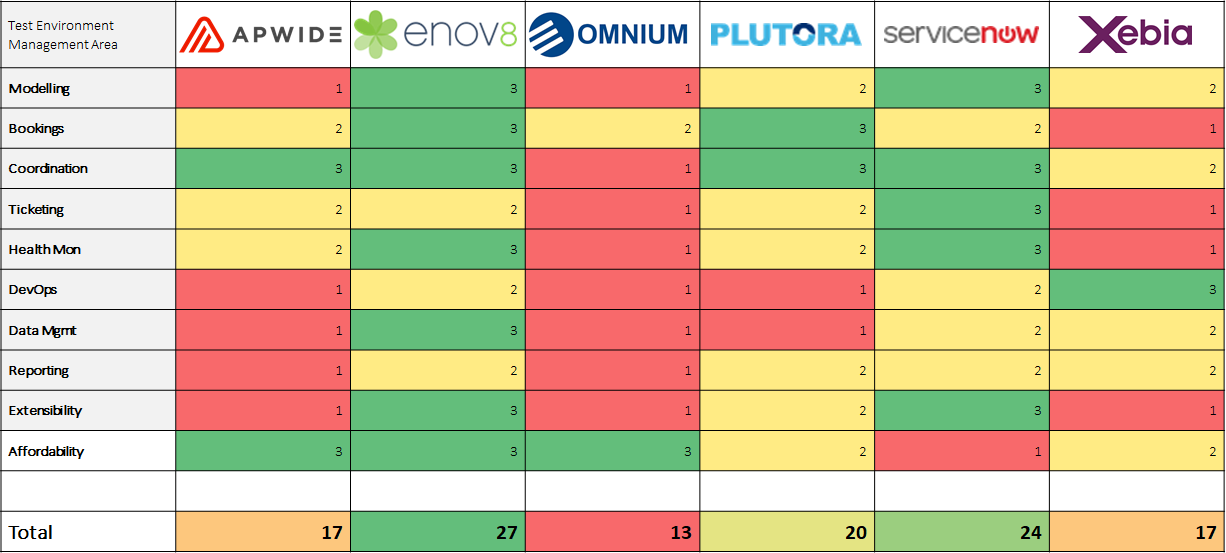

If you suspect that your IT service management tools aren’t living up to their promises, you might want to check out a newer platform such as Enov8. You may find that software can cover gaps in your processes without painful changes on the process side.

#3: You’re Thinking About Incidents Wrong

One way IT service management systems regularly fail their users is by focusing too much on fixing problems. I know, that seems like an odd claim. The truth is that IT organizations sometimes focus more on fixing their own problems than on solving problems for the business.

IT organizations regularly think about incidents as engineering problems, while users think about them as an inability to get work done. I really like the analogy of a broken light bulb. An IT organization sees the broken light bulb as the problem. The business doesn’t see it that way. To them, the problem is that they’re trying to work in the dark. Engineers might spend days trying to get a new light bulb to users when a much simpler fix would be to open the window shades.

IT service management works best when it focuses on delivering the results the business needs. Often that requires quality engineering, but engineering should never be the primary concern. If your team looks at a new incident and immediately jumps to figuring out the technical cause, your IT service management implementation is probably failing. Focus first on fixing the problem for the business, and only then on fixing the root cause.

#4: Your Processes Are Too Complicated

IT service management is about putting processes in place to solve problems for the business. This is a worthy goal! Unfortunately, organizations often lose sight of it in the day-to-day running of the team. Something goes wrong during incident response, so they add a new step to a process. That new step solves one problem but creates another that isn’t immediately apparent. When that second problem surfaces, the team adds yet another step.

You can see where this is going. In trying to fix lots of little problems in your IT service management implementation, you’ve created one big one: your processes have become much too complicated. Overcomplicated processes have many consequences. Employees don’t know what to do while dealing with problems. Management can’t easily understand the state of the response to any given incident. The business is stuck suffering from open issues. Resist the urge to add a new step to the process every time you encounter a problem. If you’re already in the habit of doing this, look for steps you can remove to simplify the process.

#5: You’re Not Focused on People

ITIL books and training focus heavily on processes and systems. That’s unavoidable, because the people who write the books and design the training don’t know the people in your business. But those people are the reason IT service management exists. The goal is to make their lives easier. It’s not about implementing a specific process or creating the perfect architecture.

At the end of the day, the true measure of success is whether your IT organization makes working for your business better. Successful IT service management implementations spend a lot of time thinking about their users. They talk with those users and listen to the problems they’re facing. Unsuccessful implementations get bogged down in metrics and process tweaks.

The Hardest Part Is Recognizing the Problem

Most IT service management implementations don’t fail because of malice. They don’t fail because the team is incompetent, either. They fail because the team didn’t recognize the warning signs before failure became entrenched in its systems. These IT organizations pursued their implementations with the best of intentions but didn’t realize they were headed toward failure. If you recognize some of these issues in your organization, it’s not too late to start fixing them. It’ll require diligence and critical thinking, but you can absolutely be successful.

Author Eric Boersma

This post was written by Eric Boersma. Eric is a software developer and development manager who’s done everything from IT security in pharmaceuticals to writing intelligence software for the US government to building international development teams for non-profits. He loves to talk about the things he’s learned along the way, and he enjoys listening to and learning from others as well.